robots.txt: How to resolve a problem caused by robots.txt

robots.txt is a file that tells Google which pages or URLs its crawler may crawl. Its rules let you allow or block crawling of individual pages.

What exactly is robots.txt?

Most search engines, such as Google, Bing, and Yahoo, use a program that browses the web, gathers data from each site, and follows links from one site to another. This program is called a spider, crawler, or bot.

In the early days of the internet, computing power and memory were expensive, and some website owners were troubled by search engine crawlers. Websites of that time could not handle robots visiting every page again and again. As a result, servers often went down, pages could not be shown to users, and the website's resources were used up.

That is why the robots.txt file was introduced. It tells search engines which pages they are allowed to crawl and which they are not. The robots.txt file is located in the root directory of your website (for example, https://yourwebsite.com/robots.txt).

When well-behaved robots visit your site, they follow the instructions in your robots.txt file. If they cannot find a robots.txt file, they will crawl the entire website, which can put extra load on your server and cause problems for your users.

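For example, these two lines tell every crawler (*) not to crawl any page on the site (/):

```text
User-agent: *
Disallow: /
```

To give rules to one specific crawler, put its name in the User-agent line:

- User-agent: Googlebot (Google's crawler)

- User-agent: Amazonbot (Amazon's crawler)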
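To see how a crawler reads rules like these, here is a minimal sketch using Python's standard-library `urllib.robotparser`. The domain `example.com` and the helper `build_parser` are hypothetical. It shows that `User-agent: *` with `Disallow: /` blocks every crawler, that a group named after Googlebot applies only to Googlebot, and that an empty file (no rules at all) allows everything, just as a crawler that finds no robots.txt crawls the whole site. This parser applies the first matching rule in a group, which is simpler than Google's own matching, so treat it as an illustration rather than an exact copy of Googlebot.

```python
from urllib.robotparser import RobotFileParser


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text directly (hypothetical helper, no network access)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


# Every crawler (*) is blocked from every path (/).
BLOCK_EVERYONE = """\
User-agent: *
Disallow: /
"""

# Only Googlebot is blocked; every other crawler may crawl the whole site.
BLOCK_GOOGLEBOT_ONLY = """\
User-agent: Googlebot
Disallow: /

User-agent: *
Allow: /
"""

# No rules at all: nothing is blocked.
NO_RULES = ""

if __name__ == "__main__":
    page = "https://example.com/about.html"
    for name, rules in [("block everyone", BLOCK_EVERYONE),
                        ("block Googlebot only", BLOCK_GOOGLEBOT_ONLY),
                        ("no rules", NO_RULES)]:
        parser = build_parser(rules)
        for agent in ("Googlebot", "Amazonbot"):
            print(f"{name}: {agent} may fetch {page}? {parser.can_fetch(agent, page)}")
```

Swapping the User-agent name is all it takes to move a rule from every crawler to a single one.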

How does robots.txt affect SEO?

Google handles the large majority of search traffic today, so let's focus on Google. Google sets a crawl budget for each site, which determines how much time and how many requests Googlebot spends crawling your website.

The crawl budget depends on two factors:

1- Server capacity: every robot visit adds load to your server and can make your site slower during the visit, so Google crawls less when your server is slow or returns errors.

2- Popularity and amount of content: Google wants its results to stay current, so it crawls popular websites and websites with more content more often.

To use robots.txt well, keep your site well maintained. If you want to stop crawlers from accessing a file, you can block it in robots.txt.

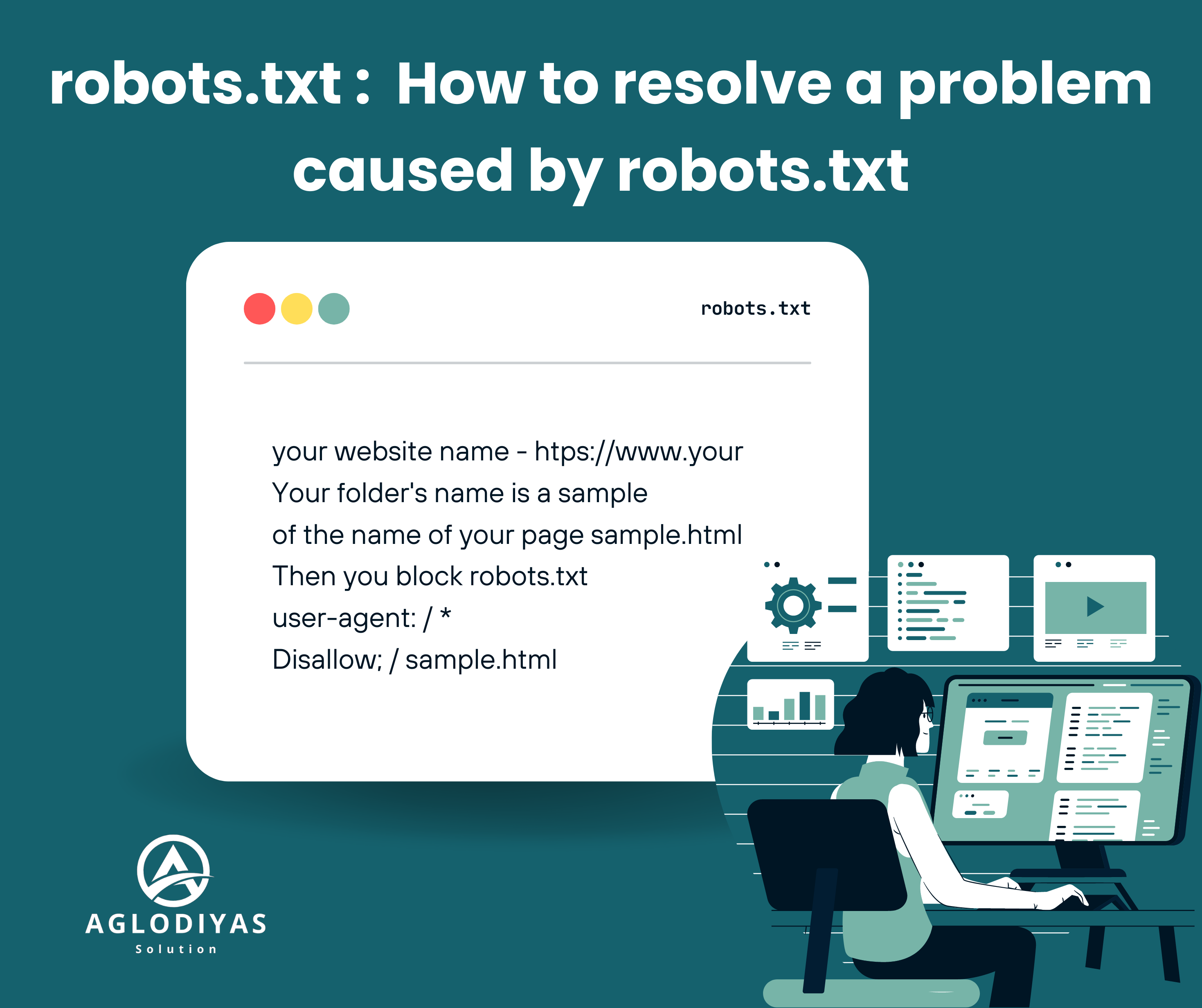

- What format should a robots.txt file use?

Suppose you want to keep crawlers away from a page containing information about your employees. You can block crawling of that page with a rule in your robots.txt file.

For instance, suppose:

- your website name is https://www.your

- your folder's name is sample

- your page's name is sample.html

Then you can block that page in robots.txt:

```text
User-agent: *
Disallow: /sample/sample.html
```
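A quick way to confirm that a rule blocks only the page you meant is to test it. This sketch assumes the page lives at `/sample/sample.html` on a hypothetical `www.example.com`, and uses Python's built-in `urllib.robotparser`; `is_allowed` is a made-up helper name.

```python
from urllib.robotparser import RobotFileParser

# Block one page (sample.html inside the sample folder) for every crawler.
ROBOTS_TXT = """\
User-agent: *
Disallow: /sample/sample.html
"""

# Hypothetical domain standing in for your own website.
SITE = "https://www.example.com"


def is_allowed(path: str, agent: str = "Googlebot") -> bool:
    """Return True if `agent` may crawl SITE + path under ROBOTS_TXT."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(agent, SITE + path)


if __name__ == "__main__":
    for path in ("/sample/sample.html", "/sample/other.html", "/"):
        print(path, "allowed" if is_allowed(path) else "blocked")
```

Rules match by prefix, so `/sample/sample.html?ref=home` is blocked too, while other pages in the same folder stay crawlable.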

- How do I fix a problem caused by robots.txt?

If Google Search Console lists some of your URLs as "Blocked by robots.txt" under the Excluded category, there is a remedy. This status means your robots.txt rules are stopping Google from crawling those URLs. If you blocked them on purpose, no action is needed; if not, your robots.txt file needs fixing.

Let's find out how to fix this issue.

- Open your Blogger dashboard

- Click Settings

- Click Custom robots.txt

- Turn it on

- Paste your robots.txt rules into the box

- Click Save

How do you create your website's robots.txt file?

- Visit the Sitemap Generator

- Paste your website URL

- Click Generate Sitemap

- Copy the generated code (it looks like the example below)

- Paste it into your custom robots.txt section

```text
User-agent: *
# Block search result pages
Disallow: /search
# Block category pages
Disallow: /category/
# Block tag pages
Disallow: /tags/
Allow: /
```
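The exact output depends on the generator. Assuming it blocks search, category, and tag pages and allows everything else, this sketch checks a few sample URLs on a hypothetical `example.com` blog with Python's `urllib.robotparser` before you paste the rules in. `crawl_report` is a made-up helper name.

```python
from urllib.robotparser import RobotFileParser

# Assumed generator output: block search, category, and tag pages, allow the rest.
GENERATED_RULES = """\
User-agent: *
# Block search result pages
Disallow: /search
# Block category pages
Disallow: /category/
# Block tag pages
Disallow: /tags/
Allow: /
"""


def crawl_report(rules: str, urls: list[str], agent: str = "Googlebot") -> dict[str, bool]:
    """Map each URL to True (may be crawled) or False (blocked)."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())  # comment lines starting with # are ignored
    return {url: parser.can_fetch(agent, url) for url in urls}


if __name__ == "__main__":
    urls = [
        "https://example.com/search?q=seo",
        "https://example.com/search/label/SEO",
        "https://example.com/category/news/",
        "https://example.com/tags/robots/",
        "https://example.com/2024/01/my-post.html",
    ]
    for url, allowed in crawl_report(GENERATED_RULES, urls).items():
        print("crawl" if allowed else "skip ", url)
```

Because matching is by prefix, `Disallow: /search` also blocks every URL under `/search/`, such as label pages, and would even block a post whose path begins with `/search`.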

After these settings, go to Custom robots header tags:

- Turn on Enable custom robots header tags.

- Click Home page tags and turn on all and noodp.

- Click Archive and search page tags and turn on noindex and noodp.

- Click Post and page tags and turn on all and noodp.
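Options such as all and noindex are robots directives. As a rough sketch, assuming the directives reach the page as a `<meta name="robots">` tag (one common way they are delivered; this does not claim how Blogger emits them), here is how a crawler could read them with Python's built-in `html.parser`. `RobotsMetaParser` and `robots_directives` are hypothetical names.

```python
from html.parser import HTMLParser
from typing import Optional


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag: str, attrs: list[tuple[str, Optional[str]]]) -> None:
        # HTMLParser already lowercases tag and attribute names.
        if tag != "meta":
            return
        attributes = {name: value or "" for name, value in attrs}
        if attributes.get("name", "").lower() != "robots":
            return
        # content="noindex, noodp" -> {"noindex", "noodp"}
        for directive in attributes.get("content", "").split(","):
            directive = directive.strip().lower()
            if directive:
                self.directives.add(directive)


def robots_directives(html: str) -> set[str]:
    """Return the set of robots directives found in an HTML document."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return parser.directives


if __name__ == "__main__":
    archive_page = '<html><head><meta name="robots" content="noindex, noodp"></head></html>'
    print(robots_directives(archive_page))
```

Note the division of labour: robots.txt controls crawling, while noindex controls indexing, and a crawler can only see a noindex directive on a page it is allowed to crawl.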

After you complete these steps, Google will crawl and index your website, which can take anywhere from a few days to a few weeks.

How does Googlebot work?

Googlebot first reads your website's robots.txt file. It does not visit pages that are disallowed; pages that are allowed are crawled and can then be indexed. After crawling and indexing, Google ranks your pages in its search results.
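The order described above (read robots.txt, skip disallowed pages, crawl the rest) can be pictured with a toy crawler. This is a hypothetical simulation, not how Googlebot is actually built: the in-memory `SITE`, the `example.com` host, and the `crawl` function are made up for illustration, and robots.txt is checked with Python's `urllib.robotparser` before each page is fetched.

```python
from collections import deque
from urllib.robotparser import RobotFileParser

# Hypothetical in-memory website: each path maps to the links found on that page.
SITE = {
    "/": ["/about.html", "/sample/sample.html", "/blog/post-1.html"],
    "/about.html": ["/"],
    "/sample/sample.html": ["/"],
    "/blog/post-1.html": ["/blog/post-2.html"],
    "/blog/post-2.html": [],
}

# Hypothetical rules: keep every crawler out of the /sample/ folder.
ROBOTS_TXT = """\
User-agent: *
Disallow: /sample/
"""


def crawl(site: dict[str, list[str]], robots_txt: str,
          agent: str = "Googlebot",
          host: str = "https://example.com") -> tuple[list[str], list[str]]:
    """Breadth-first crawl from "/" that checks robots.txt before every fetch.

    Returns (crawled, blocked). Crawled pages are the ones that could go on
    to be indexed; blocked pages are never fetched, so their links are never seen.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # step 1: read robots.txt first

    crawled: list[str] = []
    blocked: list[str] = []
    seen = {"/"}
    queue = deque(["/"])
    while queue:
        path = queue.popleft()
        if not parser.can_fetch(agent, host + path):
            blocked.append(path)  # step 2a: disallowed, skip without fetching
            continue
        crawled.append(path)  # step 2b: allowed, "fetch" the page
        for link in site.get(path, []):  # follow links to discover new pages
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return crawled, blocked


if __name__ == "__main__":
    crawled, blocked = crawl(SITE, ROBOTS_TXT)
    print("crawled (can be indexed):", crawled)
    print("blocked by robots.txt:", blocked)
```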

How do you check your website's robots.txt file?

Your robots.txt file is public, so the quickest check is to open https://yourwebsite.com/robots.txt in your browser. You can also search for it in the Google search engine.

Type:

site:yourwebsite.com/robots.txt
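Because robots.txt always sits at the root of the host, you can also locate and check it from code. This is a minimal sketch: `yourwebsite.com` is a placeholder, and `robots_url` and `fetch_rules` are hypothetical helper names built on Python's `urllib.parse.urlsplit` and `urllib.robotparser.RobotFileParser`.

```python
from urllib.parse import urlsplit
from urllib.robotparser import RobotFileParser


def robots_url(page_url: str) -> str:
    """robots.txt lives at the root of the host, whatever page you start from."""
    parts = urlsplit(page_url)
    return f"{parts.scheme}://{parts.netloc}/robots.txt"


def fetch_rules(page_url: str) -> RobotFileParser:
    """Download and parse the live robots.txt for the page's site (needs network access)."""
    parser = RobotFileParser(robots_url(page_url))
    parser.read()
    return parser


if __name__ == "__main__":
    # Placeholder URL: replace it with a page on your own site.
    page = "https://yourwebsite.com/2024/01/my-post.html"
    print("robots.txt location:", robots_url(page))
```

With network access, `fetch_rules(page).can_fetch("Googlebot", page)` tells you whether your live rules let Googlebot crawl that page.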


In this article, I have tried my best to explain what robots.txt is, how it affects SEO, what format a robots.txt file uses, how to fix problems caused by robots.txt, how to create your website's robots.txt file, and finally, how Googlebot works. A robots.txt file is how you give Googlebot directions about which parts of your site to crawl.

I hope this post has cleared up your doubts. If you have any suggestions for the article, feel free to share them.

Website Link- aglodiyas.blogspot.com

Question: What is a robots.txt file used for?

Answer: robots.txt is a text file that tells search engine crawlers which pages to crawl and which not to crawl.

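This file is important for SEO, but it does not provide security: anyone can read it, and crawlers that ignore it can still access the pages it lists.

For example, banking websites and company login pages often block crawling of their login pages, but they still rely on authentication to protect them.

robots.txt is also used to keep crawlers away from pages you don't want crawled. However, a blocked URL can still appear in search results (without a description) if other sites link to it. To keep a page out of Google's results, use a noindex tag and leave the page crawlable.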

Question: Is robots.txt good for SEO?

Answer: A robots.txt file helps web crawlers determine which folders and files on your website they should crawl. It is a good idea to have a robots.txt file in your root directory. In my opinion, this file is essential.

Question: Is robots.txt legally binding?

Answer: When I researched this issue a few decades ago, court cases indicated that the presence or absence of a robots.txt file was not relevant in determining whether a scraper or user had violated the CFAA (Computer Fraud and Abuse Act) or similar state laws, or was liable for trespass or unjust enrichment. A site's terms of service mattered more in such determinations; these are usually published as a terms and conditions page (or terms.txt).

If you have any doubts, please let me know.